Data is driving most of our modern-age businesses. Businesses draw massive data from several applications for business analytics and better decisions.You will need a data warehouse or lake to draw data from business applications. Yet, data warehouses only receive data in set frequency and are hardly up-to-date.

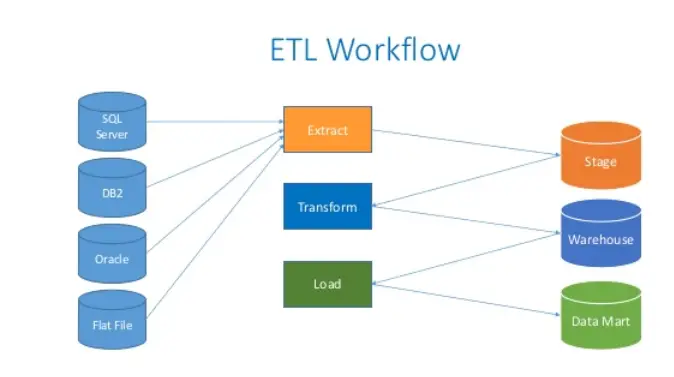

The data warehouse is populated through data integration processes-extraction, transformation, and loading (ETL).

Data warehouses should reflect any changes in the transactional databases feeding into it. Change data capture replicates the changes in the data’s final destination.

Capturing data changes and loading them to a data warehouse is time-consuming. ETL tools can handle CDC processing, but performance can fall when the data grows. But, there are ways of optimizing the process.

Read along to understand how you can optimize your ELT process using CDC.

Read: Business Data Analytics: What You Need to Know

What is Change Data Capture (CDC)

Change data capture is a way of capturing changes in databases into a data warehouse. CDC involves tools that can detect database changes and replicate them.

Data replication is vital for real-time data analytics. CDC can drive incremental data loading near real-time. It allows databases and data warehouses to accommodate any CDC event actions.

So, CDC is a data integration technique for a high-velocity data stream. It offers scalable, reliable, and low latency data replication with few computation resources.

The legacy Change Data Capture tools have limited support to new database technologies. Also, most of them do not have comprehensive enterprise use cases. But, using modern CDC in ETL offers robust performance for real-time data applications.

Your business applications like business intelligence can now run without limitations. Modern data integration platforms use APIs to track any change from any source. They transform the data and stream the changes to central data repositories.

Read: Protecting User Data In Your Mobile App: 6 Things To Consider

How CDC Works

Source: Wikimedia Commons

For every update within the source database, you may need a reflection of the change. The associated resource like the search index should reflect the changes too. It is critical, especially to relational databases like MySQL, Oracle, PostgreSQL, etc.

SQL Change Data Capture, Oracle CDC, and others cut dual writes. They also update resources accurately and simultaneously.

There is no need to copy the entire database for analytics with CDC. You only need to track and capture the changes within the source and destination targets. The changes include “update,” “insert,” and “delete” events. They are replicated in other systems relying on similar data.

Detecting, capturing, and moving altered data sets cuts data integration time. That gives you more capability to perform frequent smaller data integration tasks.

So, CDC reduces the required resources for data warehousing. It also drives continuous data replication and integration.

For any data changes in any data set, CDC will enable other linked databases to reflect similar changes. So, systems will trigger events or refresh their data version accordingly.

Read: Switching between different Linux distributions without losing data

How to Optimize ETL Using CDC

ETL tools extract data, enrich it while on transit, and load it into the target destination of choice e.g data lake.

Using CDC in the ETL process offers a new approach to moving data from source to target. CDC places change data into a data pipeline for better data transfer efficiency. It can either be in batch or real-time.

There are several CDC processing methods:

- Time-Stamped Technique: Use source’s timestamps to mark and extract altered data sets.

- Trigger-Based Technique: It needs database triggers for any data changes in the source system. It replicates the changes in the destination target.

- Snapshot Technique: It gets a full data extraction at the staging process from the target. A source snapshot is taken and compared to the original one to spot changes.

- Log-Based Technique: Database has log files that track every change. Also, they have time stamps and other unique identifiers. The CDC method relies on log file information to perform CDC processes. The technique applies in several use cases, including high transaction volume scenarios.

You can leverage CDC to optimize your ETL in a couple of ways:

Use Single Data Integration Platform for Data Replication and Event Processing

Modern CDC solutions use a single platform for CDC data ingestion and replication. It allows your data analytics teams to visualize tasks for enhanced ETL productivity.

Also, it reduces resource usage and requirements. It brings data ingestion, CDC integration, and event processing into a unified platform. It makes the ETL process more efficient.

Read: Is Coding Easier Today Than It Was 10-15 Years Ago

Execute Complex Flows and Tasks With Zero Coding

Also, CDC makes it easy to execute bulky, complex flows and tasks without writing CDC scripts.

Software engineers can easily set up database triggers to capture data changes. Writing CDC scripts takes time and improper code can cause misrecording or data non-capture.

Modern CDC solutions can handle complex tasks without the need for error-prone code. With a mouse click and an intuitive interface, you can process complex flows. Also, you can replicate bulk data sets, and adjust targets.

Robust Data Transformation and Enrichment for Any File Format in Real-Time

Source: Piqsels

Central repositories like data warehouses and lakes handle data from different sources and formats.

- JSON from social media, APIs, etc

- Log files with network data

- RDBMS from CRMs

- XML from third parties like the point of sale data, etc.

It can be challenging to have all these data formats in central repositories. MapReduce-dependent ETL processes are standard in diverse file format handling. But, they can easily fail in high data volume scenarios and need custom execution for each file format.

Read: A brief guide to testing APIs and the tools involved

Traditional ETL tools do not handle batch updates and complex schemas effectively. For instance, they can not reference data elements spread across several database tables.

Modern CDC solutions stream data from any source in any format. Also, they can provide in-flight data correlation. Other data enrichment processes like standardization can be performed. All these can be done in batch or real-time as your need commands.

Modern optimized CDC in ETL process has several benefits as enlisted below.

Benefits of CDC in ELT Process

Reduce Resource Requirements

CDC reduces the amount of data going for ETL processes as it only focuses on CDC events. Fewer data to process translates to faster processing. So, it will save time and be less resource-intensive leading to efficiency and cost gains.

Supports Continuous Integration

The traditional ETL process uses to run big data integration tasks, one at a time. It was normally done at night, to reduce the impact on users. It could take the whole night, limiting the running of simultaneous tasks.

But, the CDC in the ETL process makes running smaller data integration tasks easier. You can also run the tasks at a higher frequency. It makes it easier to get real-time data for analysis and business intelligence.

Facilitates Data Duplication

CDC in ETL makes data replication strategy easy to plan. Particularly for high availability or disaster recovery scenarios.

Data replication is suitable if you need a test, production, or backup database. And CDC in ETL tools leads to faster replication, updating, and syncing your databases.

Read: 7 Tips to create a database for beginners

Transactional Analysis

Integrating CDC in your ETL process allows conducting batch monitoring and data analysis. For example, you can use it to check if a debit card is being used in different locations simultaneously.

Take Away

There is high demand for real-time data streaming and associated infrastructure. Increasing high volume and velocity data overstretching the ineffective traditional systems.

Leveraging a new approach using CDC to optimize the ETL process comes in handy. It drives robust and scalable data integration to support current data streaming demands.

No more worries about complex data from diverse sources. They can be easily ingested, transformed, and loaded in real-time. All these are doable with little overhead and resources.

If you like the content, we would appreciate your support by buying us a coffee. Thank you so much for your visit and support.